Introduction

ARC2 Proxy and NGINX are versatile proxy servers with overlapping capabilities, and in many scenarios either one can fill the same role. Both can serve cached responses, a feature that is crucial for the performance and scalability of web applications. In this round of testing, we evaluate how efficiently each one serves a cached "Hello World!" response.

By running the same performance tests on the same machine, we aim to highlight differences in response times, resource utilization, and scalability between ARC2 Proxy and NGINX. This comparison is meant to help developers and system administrators choose the proxy server best suited to their requirements.

Features to be tested in this round

- Serve a cached "Hello World!" response

Setup

The tests ran on a consumer-grade computer running Linux, with the following specifications:

- Kernel Version: 6.11.3-200.fc40.x86_64 (64-bit)
- Processors: 12 × Intel® Core™ i5-10400 CPU @ 2.90GHz
- Memory: 23.3 GiB of RAM
- Linux Distribution: Fedora Linux 40
- KDE Plasma Version: 6.2.0
- ARC2 Proxy Version: oxyredox-v2/2.1.29
- NGINX Version: nginx/1.26.2

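For benchmarking, we used k6 with the following script. X is the number of virtual users (VUs) in a given run:

```javascript
import http from 'k6/http';
import { sleep } from 'k6';

// X = number of virtual users (VUs) for a run, passed as a
// (hypothetical) VUS environment variable: k6 run -e VUS=1000 script.js
const X = parseInt(__ENV.VUS || '100', 10);
// ARC2 Proxy listens on port 80. The NGINX configuration listens on
// port 79, so NGINX runs would need TARGET=http://localhost:79/.
const TARGET = __ENV.TARGET || 'http://localhost/';

export const options = {
  stages: [
    { duration: '5s', target: X },  // ramp up from 0 to X VUs
    { duration: '30s', target: X }, // hold X VUs for 30 seconds
  ],
  insecureSkipTLSVerify: true, // only matters for HTTPS targets
};

export default function () {
  http.get(TARGET);
  sleep(1); // each VU waits 1 s between requests
}
```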

Each run starts with a 5-second ramp-up from 0 to X VUs and then holds X VUs for 30 seconds. Ramping up gradually, instead of opening all connections at once, gives each proxy time to allocate resources.
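Every iteration of the script above ends with `sleep(1)`. As a result, the total number of requests in a run depends mostly on the VU schedule, not on proxy speed. The sketch below estimates that total. It integrates the VU count over the two stages and divides by the iteration time, which is the 1 s sleep plus the request latency.

This is a rough model resting on several assumptions:
- The ramp-up is linear.
- Each iteration makes exactly one request.
- Iterations still running when the last stage ends are ignored.

`estimateRequests` is a hypothetical helper, not part of k6.

```javascript
// Rough estimate of how many requests a k6 run with the stages above issues.
// Assumptions: VUs ramp linearly from 0 to `vus` during the ramp-up stage,
// every iteration makes exactly one request, and each iteration lasts
// `sleepSeconds` plus the request latency.
function estimateRequests(vus, rampSeconds = 5, holdSeconds = 30,
                          sleepSeconds = 1, latencySeconds = 0) {
  // Area under the VU curve, in VU-seconds: a triangle for the ramp-up
  // plus a rectangle for the hold stage.
  const vuSeconds = 0.5 * vus * rampSeconds + vus * holdSeconds;
  // Each VU completes one iteration per (sleep + latency) seconds.
  return vuSeconds / (sleepSeconds + latencySeconds);
}

console.log(estimateRequests(100));   // 3250
console.log(estimateRequests(20000)); // 650000
```

For 100 VUs, the estimate is about 3,250 requests. This is close to the 3,297 reported for both proxies in the results. The small surplus plausibly comes from iterations that start at the very beginning of the run or finish after the final stage. Sub-millisecond latencies barely change the estimate.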

The ARC2 Proxy configuration:

```toml
listening_address = "0.0.0.0"
listening_port_http = 80
listening_port_https = 443
logging_level = "error"
add_caching = true
add_rate_limiting = false
add_logging = false
disable_default_body_limit = false
add_sql_injection_protection = false

[[proxy_rules]]
domain = "localhost"
max_age_seconds = 3600
paths = ["/"]
forward_ipv4 = "127.0.0.1"
forward_port_http = 81
rule_type = "Whitelist"
ignore_query_string = false
enable_logging = false
enable_sql_injection_protection = false
```

Note that ARC2 Proxy ran with 4 workers in total. The worker count is not set in this configuration file.

The NGINX configuration:

```nginx
worker_processes 100;

events {
    worker_connections 4096;
}

http {
    include mime.types;
    default_type application/octet-stream;

    proxy_cache_path /opt/nginx keys_zone=mycache:60m;

    sendfile on;
    keepalive_timeout 65;

    server {
        listen 79;
        server_name localhost;

        proxy_cache mycache;
        proxy_cache_valid any 60m;

        location / {
            proxy_pass http://127.0.0.1:81;
        }
    }
}
```
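As a rough sanity check on the NGINX settings, the number of simultaneous connections NGINX can hold is bounded by `worker_processes × worker_connections`. The NGINX documentation notes that `worker_connections` counts all connections, including connections to proxied servers, so a request forwarded upstream uses two. The sketch below uses a hypothetical `maxProxiedClients` helper and assumes the worst case, in which every client connection also has an upstream connection. It ignores operating-system limits such as the open-file limit (`worker_rlimit_nofile`).

```javascript
// Upper bound on concurrent client connections for an NGINX reverse proxy.
// worker_connections counts every connection a worker holds, including
// upstream ones, so in the worst case each client costs two.
function maxProxiedClients(workerProcesses, workerConnections,
                           connectionsPerClient = 2) {
  return Math.floor((workerProcesses * workerConnections) / connectionsPerClient);
}

// Values from the configuration above: 100 workers x 4096 connections.
console.log(maxProxiedClients(100, 4096)); // 204800
```

Even in this worst case, the bound is far above the 20,000 VUs used in the largest test. Cache hits do not open upstream connections at all, so `worker_connections` is unlikely to have limited the NGINX results.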

Both proxies forward requests to a test server built with Rust's Actix-web framework, running on port 81.

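The results

In each reference table, Delta is the latency advantage of the faster proxy, expressed as a percentage of the slower proxy's latency. It appears on the faster proxy's row, and the other row shows "-".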

For 100 VUs

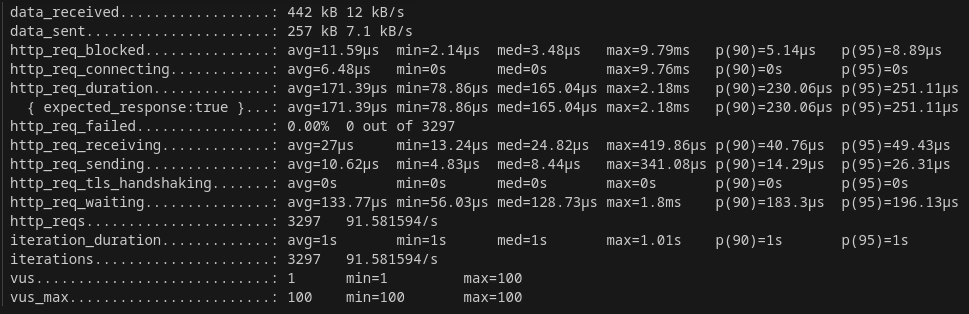

NGINX

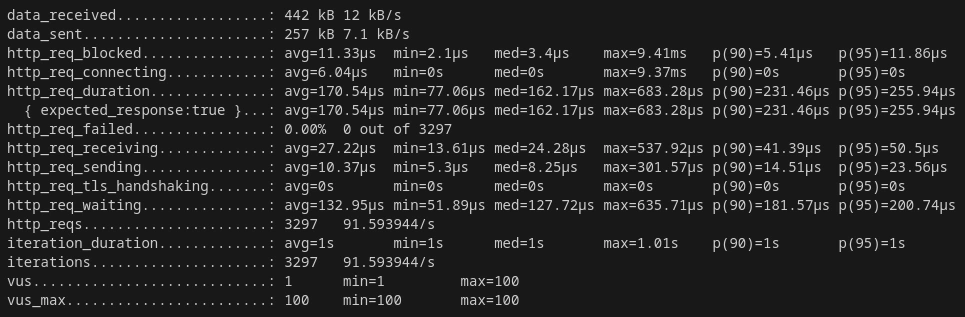

ARC2 Proxy

Reference Table

| Program | Request Latency | Delta | Total Requests |
|---|---|---|---|
| ARC2 Proxy | 170.54 μs | 0.4959% | 3297 |
| NGINX | 171.39 μs | - | 3297 |
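The Delta column can be reproduced from the latency columns. The sketch below assumes Delta is the faster proxy's latency advantage as a percentage of the slower proxy's latency, that is (slower - faster) / slower × 100, rounded to four decimal places. This formula matches the values reported for 100, 1,000, 5,000, 10,000 and 15,000 VUs. `latencyDelta` is a hypothetical helper name.

```javascript
// Relative latency advantage of the faster proxy, in percent of the
// slower proxy's latency, rounded to four decimal places.
function latencyDelta(latencyA, latencyB) {
  const slower = Math.max(latencyA, latencyB);
  const faster = Math.min(latencyA, latencyB);
  const percent = ((slower - faster) / slower) * 100;
  return Math.round(percent * 10000) / 10000;
}

// 100 VUs: ARC2 Proxy 170.54 μs vs NGINX 171.39 μs
console.log(latencyDelta(170.54, 171.39)); // 0.4959
// 1,000 VUs: ARC2 Proxy 153.96 μs vs NGINX 127.98 μs
console.log(latencyDelta(153.96, 127.98)); // 16.8745
```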

For 1,000 VUs

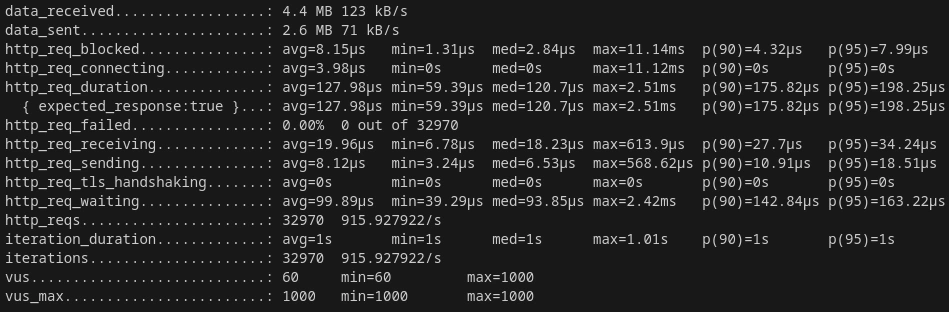

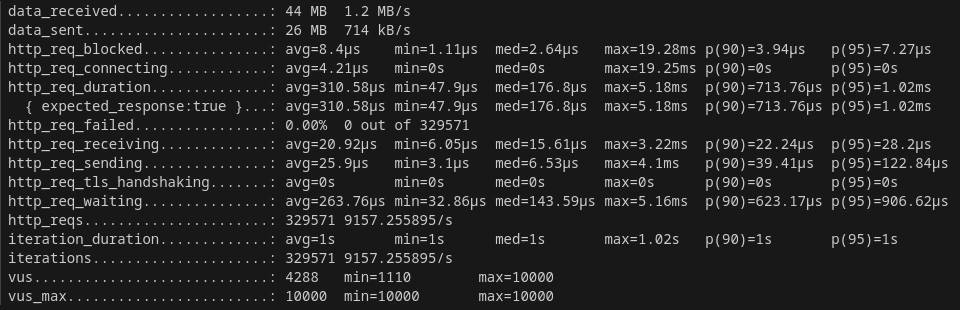

NGINX

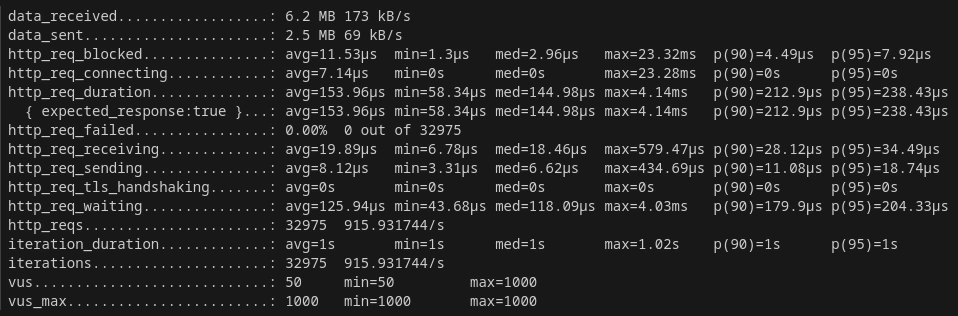

ARC2 Proxy

Reference Table

| Program | Request Latency | Delta | Total Requests |
|---|---|---|---|
| ARC2 Proxy | 153.96 μs | - | 32975 |
| NGINX | 127.98 μs | 16.8745% | 32970 |

For 5,000 VUs

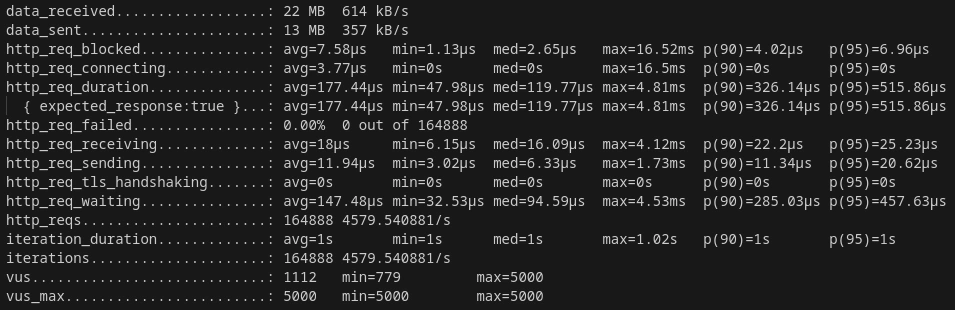

NGINX

ARC2 Proxy

Reference Table

| Program | Request Latency | Delta | Total Requests |
|---|---|---|---|
| ARC2 Proxy | 186.04 μs | - | 164887 |
| NGINX | 177.44 μs | 4.6227% | 164888 |

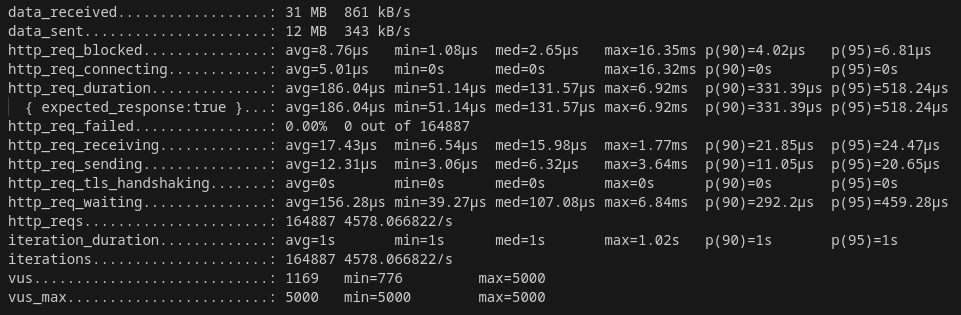

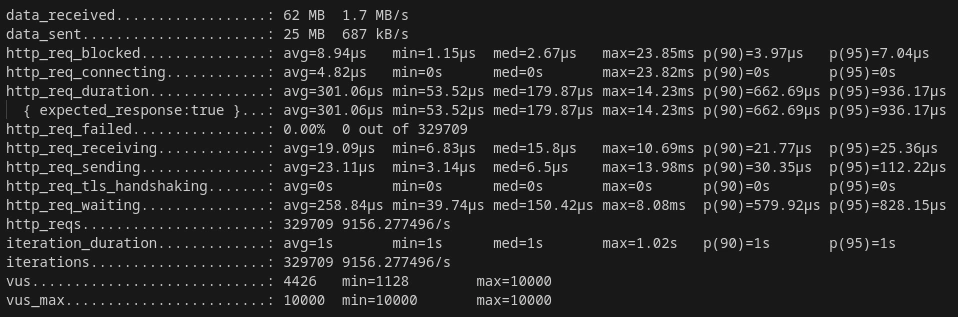

For 10,000 VUs

NGINX

ARC2 Proxy

Reference Table

| Program | Request Latency | Delta | Total Requests |
|---|---|---|---|
| ARC2 Proxy | 301.06 μs | 3.0652% | 329709 |
| NGINX | 310.58 μs | - | 329571 |

For 15,000 VUs

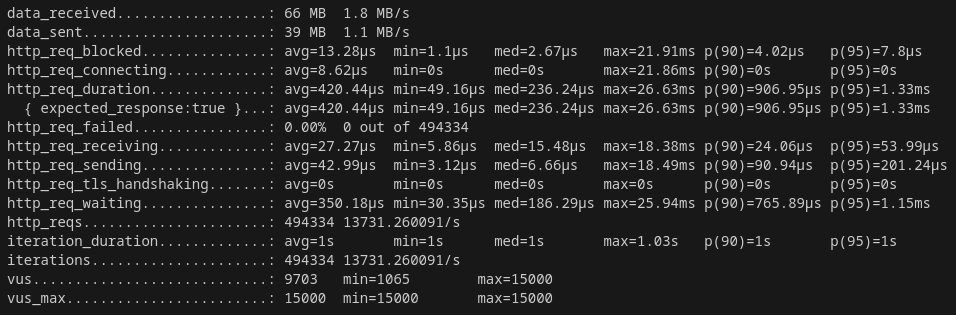

NGINX

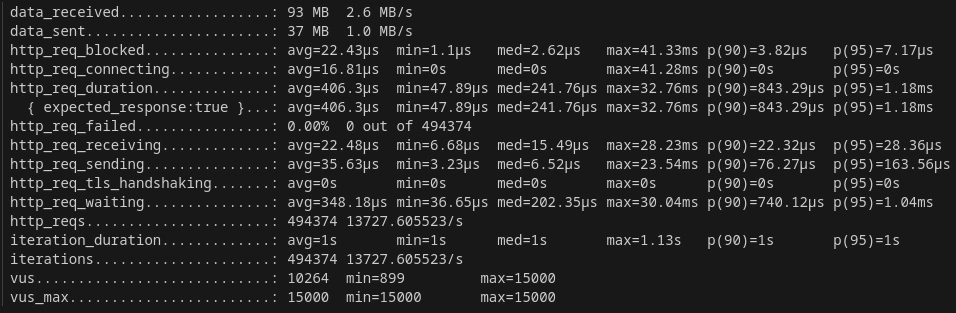

ARC2 Proxy

Reference Table

| Program | Request Latency | Delta | Total Requests |
|---|---|---|---|
| ARC2 Proxy | 406.3 μs | 3.3631% | 494374 |
| NGINX | 420.44 μs | - | 494334 |

For 20,000 VUs

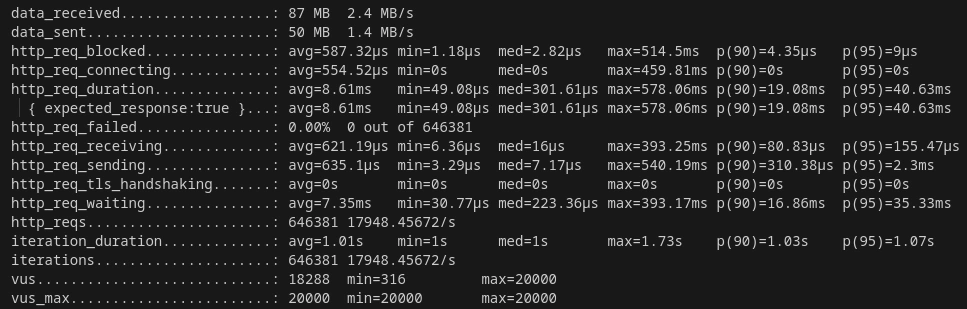

NGINX

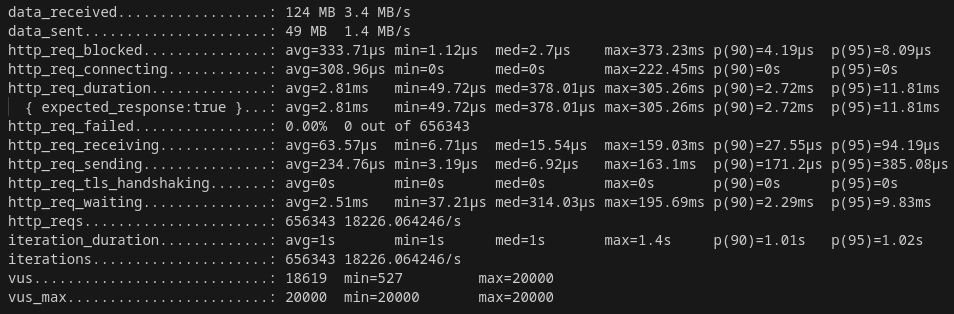

ARC2 Proxy

Reference Table

| Program | Request Latency | Delta | Total Requests |
|---|---|---|---|
| ARC2 Proxy | 2.81 ms | 67.3635% | 656343 |
| NGINX | 8.61 ms | - | 646381 |

Conclusions

Analysis

- Scalability: ARC2 Proxy's latency grew far less than NGINX's at the highest load. At 20,000 VUs, its request latency was 2.81 ms versus 8.61 ms for NGINX. This suggests that ARC2 Proxy copes better with heavy concurrent traffic in this setup.

- Consistency: ARC2 Proxy's latency stayed within a narrower range across load levels: 153.96 μs to 2.81 ms, versus 127.98 μs to 8.61 ms for NGINX. ARC2 Proxy had lower latency at 100, 10,000, 15,000 and 20,000 VUs. NGINX was faster at 1,000 VUs (by about 16.9%) and at 5,000 VUs (by about 4.6%).

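- Resource Utilization: Both proxies completed nearly the same number of requests at every load level. The largest gap, about 10,000 requests or roughly 1.5%, occurred at 20,000 VUs. This similarity is expected and is not evidence of comparable resource use. Each k6 iteration ends with a 1-second sleep, so the request count is set mainly by the number of VUs and the test duration. The latency differences are the more informative signal, and they suggest that ARC2 Proxy used system resources more effectively under heavy load. Slightly lower CPU utilization was also observed for ARC2 Proxy during the tests.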
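The per-load comparison behind these observations can be tabulated directly from the reference tables. The sketch below hard-codes the reported latencies, converted to microseconds, and lists which proxy had the lower value at each VU count. The `results` array and the `fasterProxy` helper are illustrative names, not part of either tool.

```javascript
// Reported request latencies in microseconds, copied from the reference tables.
const results = [
  { vus: 100, arc2: 170.54, nginx: 171.39 },
  { vus: 1000, arc2: 153.96, nginx: 127.98 },
  { vus: 5000, arc2: 186.04, nginx: 177.44 },
  { vus: 10000, arc2: 301.06, nginx: 310.58 },
  { vus: 15000, arc2: 406.3, nginx: 420.44 },
  { vus: 20000, arc2: 2810, nginx: 8610 }, // 2.81 ms and 8.61 ms
];

// Name of the proxy with the lower latency for one load level.
function fasterProxy({ arc2, nginx }) {
  return arc2 < nginx ? 'ARC2 Proxy' : 'NGINX';
}

for (const row of results) {
  console.log(`${row.vus} VUs: ${fasterProxy(row)}`);
}
// Prints ARC2 Proxy for 100, 10000, 15000 and 20000 VUs,
// and NGINX for 1000 and 5000 VUs.

// Ratio of NGINX latency to ARC2 Proxy latency at the highest load.
const highest = results[results.length - 1];
console.log((highest.nginx / highest.arc2).toFixed(2)); // 3.06
```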

Implications

- Performance Optimization: For applications where low latency is critical, especially under heavy load, ARC2 Proxy appears to be the superior choice. Its ability to maintain lower response times can enhance user experience and reduce server strain.

- High-Traffic Environments: In scenarios with many concurrent users, ARC2 Proxy's scalability offers a significant advantage. Going from 15,000 to 20,000 VUs raised its latency far less than NGINX's. These tests applied a steady load after a short ramp-up, however, so they do not directly measure behavior during sudden traffic spikes.

- Efficiency in Caching: Both proxies served cached responses, so ARC2 Proxy's lower latency points to more efficient cache lookup and retrieval. ARC2 Proxy keeps cached responses for repeated requests in memory. With `proxy_cache_path`, NGINX instead stores cached responses as files on disk (here under /opt/nginx) and holds only the keys in shared memory.
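To illustrate in-memory caching of repeated requests, here is a minimal sketch of a cache that keeps responses in a `Map`. Entries expire after a fixed lifetime, analogous to `max_age_seconds = 3600` in the ARC2 Proxy configuration. This is a generic technique written for illustration, not ARC2 Proxy's actual implementation. The `TtlCache` class and the `localhost/` key format are hypothetical.

```javascript
// Minimal in-memory response cache with a fixed time-to-live (TTL).
// Illustrative only: not ARC2 Proxy's implementation.
class TtlCache {
  constructor(maxAgeSeconds, now = () => Date.now()) {
    this.maxAgeMs = maxAgeSeconds * 1000;
    this.now = now;           // injectable clock, handy for testing
    this.entries = new Map(); // key -> { body, expiresAt }
  }

  get(key) {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined; // miss: never cached
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key);                // miss: entry expired
      return undefined;
    }
    return entry.body;                         // hit: served from memory
  }

  set(key, body) {
    this.entries.set(key, { body, expiresAt: this.now() + this.maxAgeMs });
  }
}

// Hypothetical cache key built from host and path.
const cache = new TtlCache(3600);
const key = 'localhost/';
if (cache.get(key) === undefined) {
  // On a miss, a proxy would fetch from the upstream (port 81) and store it.
  cache.set(key, 'Hello World!');
}
console.log(cache.get(key)); // Hello World!
```

A hit costs one `Map` lookup and a timestamp comparison, with no file or network I/O. This is the kind of path that can keep cached-response latency low.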

The benchmarking results indicate that ARC2 Proxy outperformed NGINX in serving cached responses at most load levels, particularly under high load. Its lower latency and narrower latency range make it a compelling option for applications requiring efficient request handling and scalability. NGINX remains a robust and widely used proxy server. Still, ARC2 Proxy's advantages in this specific use case suggest that it may be better suited to environments where performance and scalability are paramount.

By understanding the strengths of each proxy, developers and system administrators can make informed decisions that align with their application's requirements and performance goals.